NIME '18: Live Repurposing of Sounds

Audio Commons has had an important presence at this year's NIME conference (NIME 2018), held in Blacksburg, VA, USA. Apart from Mathieu Barthet's presentation of Playsound, the Audio Commons team also presented another project, developed in collaboration with the University of Huddersfield (FluCoMa project) and Georgia Tech and carried out by Gerard Roma and me. This collaborative project explores real-time music information retrieval (MIR) applied to live coding, using either local or crowdsourced databases (e.g., Freesound).

Figure 1. MIRLCRep demo video using the crowdsourced database Freesound.

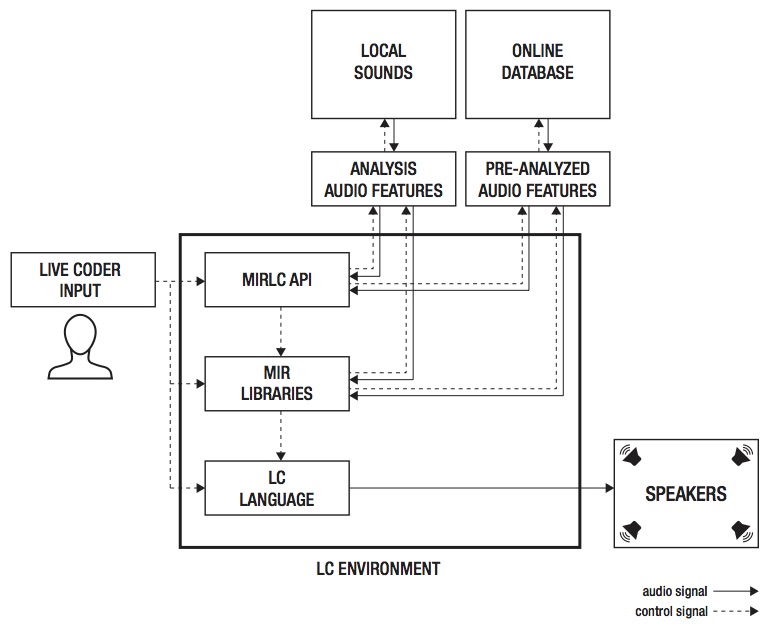

The novelty of our approach lies in exploiting high-level MIR methods (e.g., query by pitch or by rhythmic cues) through live coding techniques applied to sounds. The system is built on the SuperCollider environment and the Freesound API. The content-based search capabilities of this API rely on the Essentia library for audio analysis and the Gaia library for content-based indexing. In the online case, access to the index is provided by the Freesound API and the Freesound quark. In the local case, the Gaia index is wrapped by a web service running on the user's machine, and a corresponding quark named FluidSound is provided. Both quarks act as MIR clients. The MIRLC layer provides high-level access to the content-based querying offered by both quarks, with a focus on live coding usage.
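
The layering described above (two interchangeable MIR clients underneath a single live-coding layer) can be sketched in Python. This is not the MIRLC, FluidSound or Freesound quark code, which are SuperCollider classes; the class and method names, the toy two-value descriptors, and the brute-force nearest-neighbour search standing in for the Gaia index are all assumptions made for illustration.

```python
import math
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Sound:
    """A sound with a pitch estimate and a toy descriptor vector (illustrative)."""
    sound_id: int
    name: str
    pitch_hz: float
    descriptors: tuple  # hypothetical (brightness, loudness) pair


class MIRClient(ABC):
    """Assumed common interface that both backends expose to the layer above."""

    @abstractmethod
    def all_sounds(self) -> list:
        ...

    def nearest(self, target: tuple, k: int) -> list:
        # Brute-force nearest-neighbour search in descriptor space; a stand-in
        # for the content-based index (Gaia) used by the real system.
        return sorted(self.all_sounds(),
                      key=lambda s: math.dist(s.descriptors, target))[:k]


class InMemoryClient(MIRClient):
    """Toy backend that keeps its sounds in a Python list."""

    def __init__(self, sounds):
        self._sounds = list(sounds)

    def all_sounds(self) -> list:
        return self._sounds


class OnlineClient(InMemoryClient):
    """Hypothetical stand-in for the Freesound API + Freesound quark path."""


class LocalClient(InMemoryClient):
    """Hypothetical stand-in for the local Gaia web service + FluidSound path."""


class LiveCodingLayer:
    """Hypothetical MIRLC-style layer: high-level queries over any MIRClient."""

    def __init__(self, client: MIRClient):
        self.client = client

    def similar(self, sound: Sound, k: int = 1) -> list:
        # Ask for one extra neighbour, then drop the query sound itself.
        candidates = self.client.nearest(sound.descriptors, k + 1)
        return [s for s in candidates if s.sound_id != sound.sound_id][:k]

    def by_pitch(self, hz: float, tolerance: float = 5.0) -> list:
        # Keep sounds whose pitch estimate lies within `tolerance` Hz of `hz`.
        return [s for s in self.client.all_sounds()
                if abs(s.pitch_hz - hz) <= tolerance]


SOUNDS = [
    Sound(1, "kick", 55.0, (0.1, 0.9)),
    Sound(2, "snare", 180.0, (0.6, 0.8)),
    Sound(3, "hat", 8000.0, (0.95, 0.4)),
    Sound(4, "low tom", 60.0, (0.15, 0.85)),
]

# The same live-coding calls work whichever backend is plugged in.
for layer in (LiveCodingLayer(OnlineClient(SOUNDS)),
              LiveCodingLayer(LocalClient(SOUNDS))):
    print([s.name for s in layer.similar(SOUNDS[0])])  # ['low tom']
    print([s.name for s in layer.by_pitch(57.0)])       # ['kick', 'low tom']
```

Keeping the search behind one client interface is what lets the live-coding layer stay identical for crowdsourced and personal databases; in a real setup, the linear scan would be replaced by queries to the actual index.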

Figure 2. Block diagram of audio repurposing applied to live coding.

Visitors enjoyed trying the system and gave encouraging feedback, including suggestions for future steps:

- “Looks like a quick and easy way to make music on the fly.”

- “I think this is a great idea for making completely unique soundtracks for games/media on the fly. Great work!”

- “Love the ‘similar’ function… especially in building rhythmic patterns, seems like it could be a cool way to ‘progress’ through a piece by transferring the pattern through new similar sounds, maybe a way to end/drop off the first sounds could be cool so that it can travel.”

In summary, the system raised interest among the visitors who came to see us. Several of them expressed interest in using it in their own domains (e.g., games, multichannel audio, soundscape design). We also discussed potential next steps for the project, including soundscape generation and the addition of filtering and patterning using SuperCollider's facilities.

Figure 3. NIME 2018 poster.

Resources:

- This work was presented at NIME 2018 during a poster session on Wednesday, June 6, 2018: Xambó, A., Roma, G., Lerch, A., Barthet, M., Fazekas, G. (2018) “Live Repurposing of Sounds: MIR Explorations with Personal and Crowdsourced Databases”, Proceedings of the International Conference on New Interfaces for Musical Expression (NIME ’18), Blacksburg, Virginia, USA, pp. 364–369.

- The poster presented during the demo is available here (PDF).

- The source code of FluidSound can be found here.

- The source code of MIRLC can be found here.

Acknowledgments:

We thank the participants who gave us feedback. FluCoMa is funded by the European Commission through the Horizon 2020 programme, research and innovation grant 725899. The icon from Figure 2 is “Female” by Wouter Buning from the Noun Project.