Abbey Road Hackathon

On the 10th and 11th of November 2018, the first Abbey Road Hackathon took place in the illustrious Studio One, challenging over a hundred brilliant minds to create the next technologies for music making. Studio One is the world’s largest purpose-built recording studio, where film scores such as The Lord of the Rings trilogy and The Empire Strikes Back, along with many more award-winning compositions, have been performed and recorded. After spending 30 consecutive hours there, and possibly because of hallucinations triggered by sleep deprivation, you could almost sense all the wonderful hours of music that in the past filled the immense void above the thoughts of the attendees.

The first Abbey Road Hackathon. Video by Abbey Road Red.

Sharing Innovation

The Hackathon, organised by Abbey Road Red, the innovation department of Abbey Road Studios, was looking for audio developers, machine-learning experts, MATLAB, Max MSP and C++ heroes, Python gurus, and design thinkers to create projects inspired by the theme The Future of Music Creation. The event invited participants to answer questions such as: What will musical instruments of the future be like? What tools can we build to facilitate the creative process? How can we use technology to make music-making more accessible? The Tech and Strategic Partners included Universal, Microsoft Cognitive Services, Miquido, Juce, WhoSampled, Chirp, Bare Conductive, Hackoustic, 7digital, Ambimusic, Volumio, Quantone, Gracenote, and Cloudinary.

Abbey Road Studio One during the hackathon.

Audio Commons, which ran a demonstrator and gave a presentation in Abbey Road Studio Two during the Fast Industry Day (which you can read about in this other post), received an invitation to partner for the upcoming event, and we gladly agreed to offer resources, mentorship, and a prize for the best hack employing Audio Commons resources. In preparation for the hackathon, we pushed a little further and built a series of examples that people could build upon. Thrilled to be part of such a great experience, we presented the aim and the latest outcomes of the Audio Commons Initiative, also showing in a short video SampleSurfer by Waves Audio, AudioTexture by Lesound (now available to download for free here), MuSST, the Music and Sound Search Tool by Jamendo, and Playsound.space by Ariane Stolfi.

The Audio Commons presentation captured with a 360 camera.

You can navigate the original 360 image here.

After introducing the project and the team, Audio Commons offered participants the opportunity to access Creative Commons audio content through the latest version of the Audio Commons Mediator.

The resources offered consisted of an interactive search endpoint plus other software demonstrators, which can be explored at this page, developed by Miguel Ceriani, Sasha Rudan, Johan Pauwels, and Mathieu Barthet.

Below we present some projects which integrated the Audio Commons resources in their development.

The Winner: xAmplR

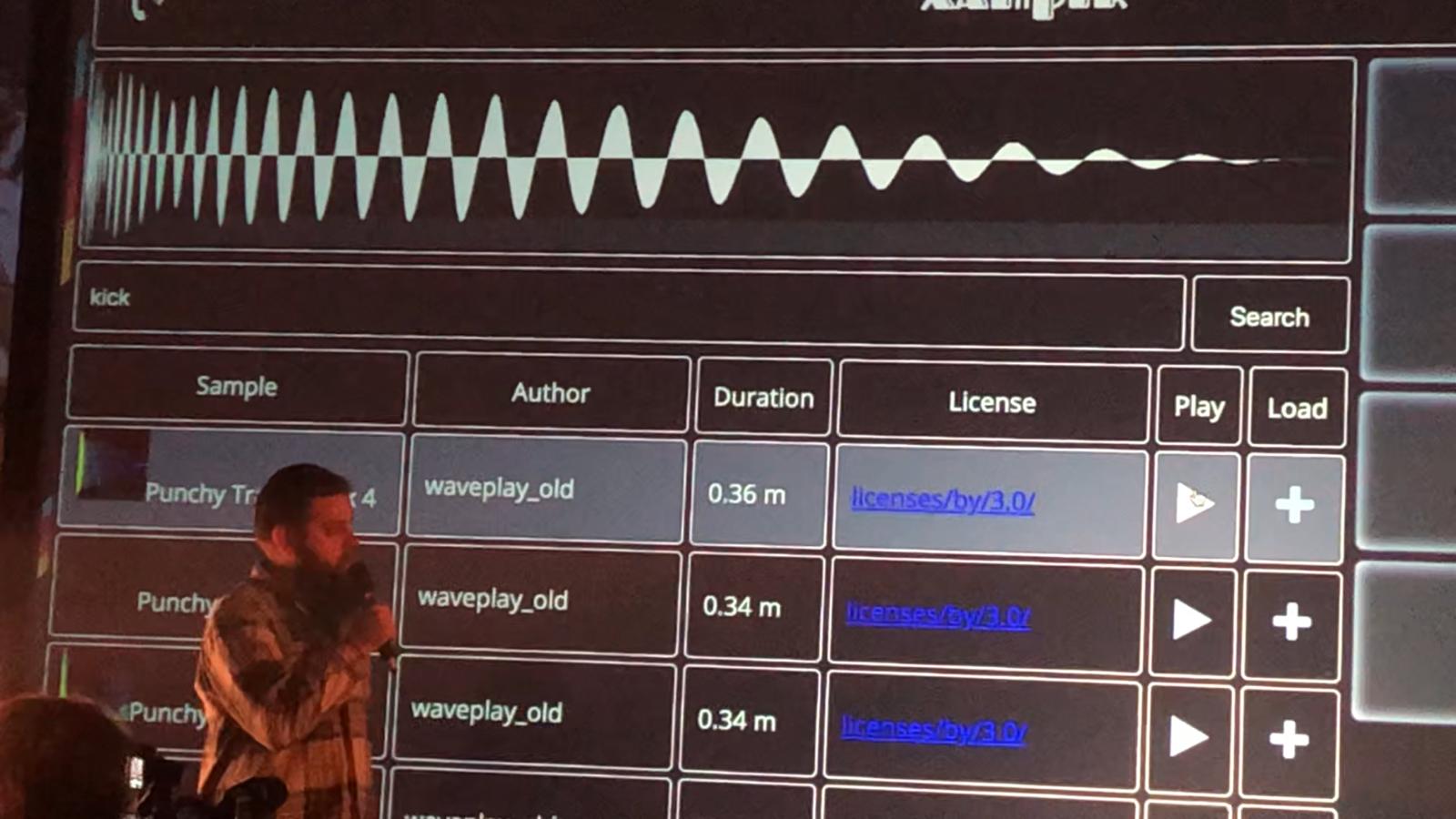

Developed by Alex Milanov and Adrian Holder, xAmplR is a browser-based sampler that lets you quickly and easily grab free audio samples from Audio Commons and then trigger them live using 16 MPC-style, MIDI-enabled pads. Samples can be searched for either by keyboard input or by Microsoft speech-to-text voice recognition. Once the search string has been received, xAmplR searches for sounds using the Audio Commons Mediator and retrieves a series of results, which can then be selected and sent to the virtual drum pad. Once the samples are loaded, you can simply click the pads to play them.

xAmplR presented on stage.

Detail of xAmplR interface.

xAmplR demonstrated brilliantly how Audio Commons content can be searched, browsed and creatively repurposed, and the developers were awarded the prize “Best use of Audio Commons”, receiving an Amazon voucher. The original submission on Devpost can be found at this link, and you can try the interactive demo yourself.

Play the Singer

Another project which creatively employed Audio Commons resources was Play the Singer by Table#7, a game running on Bela, the low-latency platform for interactive audio developed by the Augmented Instruments Lab at Queen Mary University of London. Play the Singer is a game where Player#1, whom we can call the Singer, has to avoid obstacles by raising and lowering the pitch of their singing. The obstacles are lifted by Player#2, whom we can call the DJ. Obstacles, appearing as blocks in the visual interface rendered in p5.js, correspond to the samples triggered by a sequencer running in a Pure Data (PD) patch. The PD patch is compiled to C++ code running on Bela thanks to the Heavy Compiler by Enzien Audio. The DJ can raise the volume of the samples with a MIDI controller, creating challenges for the Singer, who has to sing at a higher pitch to overcome the obstacles. The DJ can also change the sample with the MIDI controller’s knobs.
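The core mechanic described above can be sketched in a few lines of Python. This is only an illustration, not the code that runs on Bela: the pitch range, the logarithmic mapping, and the function names are all assumptions made for the example.

```python
import math

# Hypothetical singing range; the actual game may use different limits.
MIN_PITCH_HZ = 100.0
MAX_PITCH_HZ = 800.0


def pitch_to_height(pitch_hz: float) -> float:
    """Map a sung pitch to a normalised height in [0, 1] on a log scale."""
    clamped = min(max(pitch_hz, MIN_PITCH_HZ), MAX_PITCH_HZ)
    return math.log(clamped / MIN_PITCH_HZ) / math.log(MAX_PITCH_HZ / MIN_PITCH_HZ)


def obstacle_height(sample_volume: float) -> float:
    """Map the DJ's sample volume (assumed in [0, 1]) to an obstacle height."""
    return min(max(sample_volume, 0.0), 1.0)


def singer_clears(pitch_hz: float, sample_volume: float) -> bool:
    """The Singer clears an obstacle when the pitch height exceeds its height."""
    return pitch_to_height(pitch_hz) > obstacle_height(sample_volume)
```

In this sketch, a louder sample produces a taller block, so the Singer needs a higher pitch to pass it, which mirrors the challenge the DJ creates with the MIDI controller.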

Table#7 performing a demo of Play the Singer.

Table#7 working on Play the Singer.

Table#7 filming the demonstrative video. Picture by Archie Brooksbank (Abbey Road Red).

How does Play the Singer integrate Audio Commons?

The game, which you can find on GitHub with preloaded samples, also allows you to run a terminal command to search for 9 different keywords. The Python code searches the Audio Commons resources for these keywords and downloads 9 different samples of a suitable duration for use in the game, also showing the corresponding author and licensing details. A more detailed description of the project and the technology used can be found on the Devpost submission page here. Play the Singer is developed by Adan Benito, Mark Daunt, Delia Fano Yela, Beici Liang, Marco Martinez, Alessia Milo, and Giulio Moro.
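As a rough illustration of that workflow, the sketch below picks one sample of suitable duration per keyword and prints a credit line for it. It is not the project's actual script: the record fields (`name`, `author`, `license`, `duration`), the duration limits, and the `fake_search` stand-in are assumptions, and a real version would query the Audio Commons Mediator in place of `fake_search`.

```python
from typing import Any, Callable, Dict, Iterable, List, Optional

Sound = Dict[str, Any]  # hypothetical result record, not the Mediator's schema


def pick_sample(results: Iterable[Sound], min_s: float, max_s: float) -> Optional[Sound]:
    """Return the first result whose duration (seconds) is within the limits."""
    for sound in results:
        if min_s <= float(sound["duration"]) <= max_s:
            return sound
    return None


def collect_samples(
    keywords: Iterable[str],
    search: Callable[[str], List[Sound]],
    min_s: float = 0.5,
    max_s: float = 5.0,
) -> Dict[str, Sound]:
    """Choose one suitable sample per keyword; skip keywords with no match."""
    chosen: Dict[str, Sound] = {}
    for keyword in keywords:
        sound = pick_sample(search(keyword), min_s, max_s)
        if sound is not None:
            chosen[keyword] = sound
    return chosen


def credit_line(keyword: str, sound: Sound) -> str:
    """Format the author and licence details shown to the player."""
    return f'{keyword}: "{sound["name"]}" by {sound["author"]} ({sound["license"]})'


def fake_search(keyword: str) -> List[Sound]:
    """Stand-in for a real search call; returns made-up records for the demo."""
    return [
        {"name": f"{keyword}-long", "author": "alice", "license": "CC BY 4.0", "duration": 42.0},
        {"name": f"{keyword}-short", "author": "bob", "license": "CC0 1.0", "duration": 1.2},
    ]


if __name__ == "__main__":
    for kw, snd in collect_samples(["kick", "snare", "rain"], fake_search).items():
        print(credit_line(kw, snd))
```

Passing the search function in as a parameter keeps the selection and attribution logic independent of any particular service, so the same code can be exercised offline with made-up records.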

Table#7 presenting Play The Singer.

The next #RedHackers have created an interactive game you can control with your voice! #RedHackathon pic.twitter.com/20kUfHPHa1

— Abbey Road Red (@AbbeyRoadRed) November 11, 2018

Abbey Road Red tweeting about the presentation of Play The Singer.

Sonic Breadcrumbs

Sonic Breadcrumbs is a choose-your-own-adventure rooted in the physical world, which uses chirping beacons and a mobile web client to let you respond to triggers in the surrounding environment. Chirping audio beacons were used to detect spatial locality, with possible applications at home, in a museum or gallery, in an enhanced location-aware audio tour, or in promenade theatre.

The creators found the resources offered by Audio Commons to be a simple way of retrieving sounds and bringing more life to the narrative in an automated way, so that the narrative writer wouldn’t need to fuss about finding sound files themselves. Their idea was to use keywords from the narrative to search for related sound samples of a suitable duration.
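One simple way to derive search keywords from a passage of narrative is to count its most frequent content words. The sketch below is an assumption about how this could be done, not the team's implementation; the stopword list and the minimum word length are arbitrary choices for the example.

```python
import re
from collections import Counter
from typing import List

# Small illustrative stopword list; a real tool would use a fuller one.
STOPWORDS = {
    "about", "again", "from", "have", "into", "that", "their", "them",
    "then", "there", "they", "this", "when", "where", "with", "your",
}


def narrative_keywords(text: str, limit: int = 3) -> List[str]:
    """Return up to `limit` of the most frequent content words in `text`."""
    words = re.findall(r"[a-z]+", text.lower())
    # Drop stopwords and very short words, which rarely describe a sound.
    counts = Counter(w for w in words if len(w) > 3 and w not in STOPWORDS)
    return [word for word, _ in counts.most_common(limit)]
```

Each returned keyword could then be sent to a sound search, keeping only results whose duration suits the scene.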

Sonic Breadcrumbs is developed by Valentin Bauer, Tom Kaplan, Frazer Merrick and Matt Timmermans.

Heres a working example of the web app, powered by @chirp and @p5xjs, and showing our Abbey Road Runner Game.

— Frazer Merrick (@frazermerrick) November 11, 2018

Just imagine the emitters were embedded within a tourist trail, museum exhibit or even an immersive theatre set! N.b it is possible to make the 'chirps' ultrasonic. pic.twitter.com/ibT7GxYiSr

A tweet on Sonic Breadcrumbs during the event.

The creators of Sonic Breadcrumbs mentioning Audio Commons on stage.

Acknowledgements

We would like to thank the sparkling and friendly Dom Dronska and the other organisers who brought this event to life, and all the attendees who created a pleasant atmosphere where so many ideas concerned with the Future of Music Creation were shared and discussed. The event attracted much attention from the media, with a number of articles published afterwards by Computer Weekly, Musically, and many others. You can find many more pictures and videos of the event by searching for #RedHackathon on Twitter.

The Audio Commons Initiative is funded by the European Commission through the Horizon 2020 programme, research and innovation grant 688382.